Research

During his studies as a DPhil student, Ben has been working in the field of visual surveillance, in particular on the problem of obtaining passive coarse gaze estimates. Coarse gaze estimates are estimates of where people are looking that are obtained using the pose of the head rather than eye positions. In surveillance video, heads tend to appear at less than 20 pixels wide. This page is divided into three sections: Head Tracking, Coarse Gaze Estimation and Applications.

Head Tracking

For a coarse gaze estimation system to be useful, many people must be tracked simultaneously in real time and in the presence of frequent occlusions and other distractions such as animals or vehicles. Two tracking systems were developed, both of which were based on two important image measurements. The first measurement was the output of a head detector trained using the HOG detection algorithm, which has become standard for pedestrian detection. Although HOG detection is generally slow, efficient GPU implementations have made it suitable for real-time use. The second measurement came from sparse KLT tracking. Although it has been around for a long time, KLT corner tracking still provides an impressive amount of information for very little processing time.

The first tracking system to be developed was based around a Kalman filter; however, this proved to be susceptible to data association errors when the HOG detector failed. The second, more recent approach, published at CVPR 2011, uses Markov-Chain Monte-Carlo Data Association (MCMCDA) with an accurate error model. MCMCDA not only allows ambiguities to be resolved more efficiently but also allows the tracking system to cope with temporary occlusions. Take a look at the video for some examples.
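To make the Kalman filter idea concrete, the following is a minimal sketch of a constant-velocity filter for a single image coordinate. It is not the tracker described above: the state model, the noise values `q` and `r`, and the names `Kalman1D` and `track` are all assumptions chosen for illustration. A frame where the detector fails is represented by `None`, in which case the filter only predicts.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Kalman1D:
    """Constant-velocity Kalman filter for one image coordinate (illustrative)."""
    x: float            # position estimate (pixels)
    v: float = 0.0      # velocity estimate (pixels per frame)
    # Entries of the 2x2 covariance P = [[pxx, pxv], [pxv, pvv]]
    pxx: float = 100.0
    pxv: float = 0.0
    pvv: float = 100.0
    q: float = 1.0      # process noise on the velocity variance (assumed value)
    r: float = 4.0      # measurement noise variance (assumed value)

    def predict(self) -> None:
        # x' = x + v and P' = F P F^T + Q, with F = [[1, 1], [0, 1]]
        self.x += self.v
        pxx = self.pxx + 2.0 * self.pxv + self.pvv
        pxv = self.pxv + self.pvv
        self.pxx, self.pxv = pxx, pxv
        self.pvv += self.q

    def update(self, z: float) -> None:
        # Standard update with measurement matrix H = [1, 0]
        s = self.pxx + self.r
        kx, kv = self.pxx / s, self.pxv / s
        innovation = z - self.x
        self.x += kx * innovation
        self.v += kv * innovation
        pxx = (1.0 - kx) * self.pxx
        pxv = (1.0 - kx) * self.pxv
        pvv = self.pvv - kv * self.pxv
        self.pxx, self.pxv, self.pvv = pxx, pxv, pvv


def track(measurements: List[Optional[float]]) -> List[float]:
    """Filter one coordinate; None marks a frame where the detector failed."""
    first = next(z for z in measurements if z is not None)
    kf = Kalman1D(x=first)
    positions = []
    for z in measurements:
        kf.predict()
        if z is not None:
            kf.update(z)
        positions.append(kf.x)
    return positions
```

In this sketch a missed detection simply lets the prediction coast along the estimated velocity. It does not address the data association problem mentioned above, which arises when several nearby people compete for the same detections.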

Coarse Gaze Estimation

The first research into coarse gaze estimation assumed that head images were no larger than 10 pixels square, which is representative of cameras covering typical street scenes. For a head pose estimator to be effective in real-world situations, it must be able to cope with different skin and hair colours as well as wide variations in lighting direction, intensity and colour. Most existing classifiers are susceptible to these variations and require training examples covering different combinations of lighting conditions and skin and hair colours in order to make an accurate classification. An algorithm was developed to specifically address this problem by learning a model of the skin and hair colours for each new person that is observed, making it largely invariant to lighting and the individual characteristics of the people in the video.

Later research used higher resolution video, which provided head images of up to 20 pixels in diameter. For these images, classifiers based on gradient histograms and colour differences were found to be robust to small errors in the head location. When combined with tracking, both the locations and gaze directions of pedestrians could be estimated in real time.
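As an illustration of what a gradient histogram feature looks like, the sketch below computes a magnitude-weighted histogram of unsigned gradient orientations for a small greyscale patch. The function name `orientation_histogram`, the choice of 8 bins and the use of central differences are assumptions for this example and are not taken from the classifiers described above.

```python
import math
from typing import List


def orientation_histogram(patch: List[List[float]], bins: int = 8) -> List[float]:
    """Normalised histogram of unsigned gradient orientations over a patch."""
    hist = [0.0] * bins
    height, width = len(patch), len(patch[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences on the interior pixels
            gx = patch[y][x + 1] - patch[y][x - 1]
            gy = patch[y + 1][x] - patch[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue
            # Fold the orientation into [0, pi) so opposite directions share a bin
            angle = math.atan2(gy, gx) % math.pi
            index = min(bins - 1, int(angle / math.pi * bins))
            hist[index] += magnitude
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist
```

Because each pixel votes into a coarse orientation bin, shifting the patch by a pixel or so tends to change the histogram only slightly, which is one plausible reason such features tolerate small errors in the head location.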

The final development (ICCV 2011) was a system that could learn to estimate gaze directions without any manually labelled data. The system made use of unsupervised learning to automatically train randomised tree classifiers by using conditional random fields (CRFs) to model the dynamics of human head movements relative to the walking direction. With no manual labelling required, the system has the potential to benefit from an unlimited number of training examples.

Applications

The focus of an individual's attention often indicates their desired destination, whereas mutual attention between people indicates familiarity. Any single object or person receiving attention from a large number of people is likely to be worthy of further investigation. To represent this, large scale gaze maps were developed in which each square represents the amount of attention received by one square metre on the ground. Three experiments were performed to demonstrate different uses of the complete system.
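A gaze map of this kind can be sketched as a grid of one-metre ground cells that accumulates attention along each person's gaze direction. The version below is a simplified assumption rather than the method used in the experiments: it casts a single ray per observation and ignores the angular uncertainty of the gaze estimate. The name `accumulate_gaze` and the parameters `max_range` and `step` are hypothetical.

```python
import math
from collections import defaultdict
from typing import DefaultDict, Tuple

Cell = Tuple[int, int]


def accumulate_gaze(
    gaze_map: DefaultDict[Cell, float],
    x: float,
    y: float,
    angle: float,
    max_range: float = 10.0,
    step: float = 0.5,
) -> DefaultDict[Cell, float]:
    """Add one unit of attention to each 1 m ground cell crossed by a gaze ray.

    (x, y) is the person's ground position in metres and angle is the gaze
    direction in radians, both in the ground-plane coordinate frame.
    """
    visited = set()
    for i in range(1, int(max_range / step) + 1):
        d = i * step
        cell = (
            math.floor(x + d * math.cos(angle)),
            math.floor(y + d * math.sin(angle)),
        )
        # Count each cell at most once per observation
        if cell not in visited:
            visited.add(cell)
            gaze_map[cell] += 1.0
    return gaze_map


# Usage: two people whose gaze rays cross in the same ground cell.
gaze_map: DefaultDict[Cell, float] = defaultdict(float)
accumulate_gaze(gaze_map, 0.5, 0.5, 0.0, max_range=3.0)
accumulate_gaze(gaze_map, 2.5, 3.5, -math.pi / 2, max_range=3.0)
most_attended = max(gaze_map, key=gaze_map.get)
```

Cells that many rays pass through accumulate the largest values, which is the sense in which a map like this highlights common subjects of attention.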

Experiment 1: Background Attention

In the first experiment pedestrians were tracked in a busy town centre street. Up to thirty pedestrians were tracked simultaneously and had their gaze directions estimated. A gaze map was built up over the full twenty-two minute video sequence, covering approximately 2200 people. When projected, the attention map identifies the shops on the left of the view as a common subject of attention.

Experiment 2: Artificial Stimulus

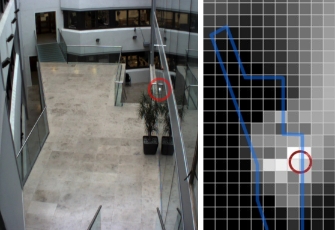

The purpose of the second experiment was to artificially draw the attention of pedestrians to a particular location. To achieve this, a bright light was attached to the wall at the location indicated by the red circle in the above images. The blue lines show the outline of the floor. For this experiment, the attention map was generated by taking the difference between the attention received with the light stimulus and the attention received without it, to correct for the stimuli normally present in the scene. A total of 200 minutes of video were analysed and 477 people were tracked.
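The differencing step can be sketched as a per-cell subtraction of two gaze maps. The optional normalisation arguments are an assumption added here so that recordings of different lengths could be compared; the text above does not say whether or how the maps were normalised, and the name `difference_map` is hypothetical.

```python
from typing import Dict, Tuple

Cell = Tuple[int, int]


def difference_map(
    with_stimulus: Dict[Cell, float],
    without_stimulus: Dict[Cell, float],
    norm_with: float = 1.0,
    norm_without: float = 1.0,
) -> Dict[Cell, float]:
    """Per-cell attention with the stimulus minus attention without it.

    norm_with and norm_without are optional scale factors, for example the
    number of people or minutes observed in each condition (an assumption).
    """
    cells = set(with_stimulus) | set(without_stimulus)
    return {
        cell: with_stimulus.get(cell, 0.0) / norm_with
        - without_stimulus.get(cell, 0.0) / norm_without
        for cell in cells
    }
```

Cells that attract the same attention in both conditions cancel to zero, so the remaining positive values point at the attention attributable to the added stimulus.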

Experiment 3: Transient Object

The aim of the third experiment was to identify a transient subject of attention. To resolve the ambiguities caused by not knowing the distance between pedestrians and the subject of their attention, the gaze estimates from both people were multiplied and combined over a sliding window of three frames. The resulting intersection correctly identifies the car as the subject of attention when projected back onto the video.
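The idea of multiplying two gaze estimates and combining them over a short window can be sketched as follows. Each person's gaze is assumed to be represented as a map from ground cells to a non-negative weight; the per-cell product is non-zero only where both gaze estimates overlap. Summing the products over the window is an assumption, since the text does not state how the frames were combined, and the names `intersect` and `sliding_window_attention` are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

Cell = Tuple[int, int]
GazeMap = Dict[Cell, float]


def intersect(map_a: GazeMap, map_b: GazeMap) -> GazeMap:
    """Per-cell product of two gaze maps; cells missing from either map are zero."""
    return {cell: map_a[cell] * map_b[cell] for cell in map_a.keys() & map_b.keys()}


def sliding_window_attention(
    frames: Iterable[Tuple[GazeMap, GazeMap]], window: int = 3
) -> Iterator[GazeMap]:
    """Yield, for each frame, the sum of the last `window` per-frame products."""
    recent: Deque[GazeMap] = deque(maxlen=window)
    for map_a, map_b in frames:
        recent.append(intersect(map_a, map_b))
        combined: GazeMap = {}
        for product in recent:
            for cell, value in product.items():
                combined[cell] = combined.get(cell, 0.0) + value
        yield combined
```

A single gaze ray leaves the distance to the subject ambiguous, whereas the product of two rays from different positions is concentrated near their crossing point, and the window suppresses crossings that appear in only one frame.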

Camera Control

In systems controlling dynamic cameras, a pose estimate from a low-resolution head image can be used to determine whether or not a close-up from a dynamic camera would provide a face image that is suitable for identification. A joint project with Eric Sommerlade used real-time gaze estimates to optimally control active cameras to obtain close-up face images.
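One simple way to picture this decision is to test whether the estimated gaze direction points roughly towards the camera. The sketch below is only an illustrative assumption and not the control strategy used in the joint project: the function name `suitable_for_closeup`, the ground-plane coordinates and the 45 degree threshold are all hypothetical.

```python
import math
from typing import Tuple


def suitable_for_closeup(
    person_xy: Tuple[float, float],
    gaze_angle: float,
    camera_xy: Tuple[float, float],
    max_offset: float = math.radians(45),
) -> bool:
    """True if the coarse gaze direction points within max_offset of the camera.

    Positions are ground-plane coordinates in metres and gaze_angle is in
    radians in the same frame.
    """
    to_camera = math.atan2(
        camera_xy[1] - person_xy[1], camera_xy[0] - person_xy[0]
    )
    # Wrap the angular difference into [-pi, pi] before comparing
    delta = gaze_angle - to_camera
    diff = math.atan2(math.sin(delta), math.cos(delta))
    return abs(diff) <= max_offset
```

A controller could use a test like this to skip people who are facing away from the camera, since a close-up of them would be unlikely to show a face.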